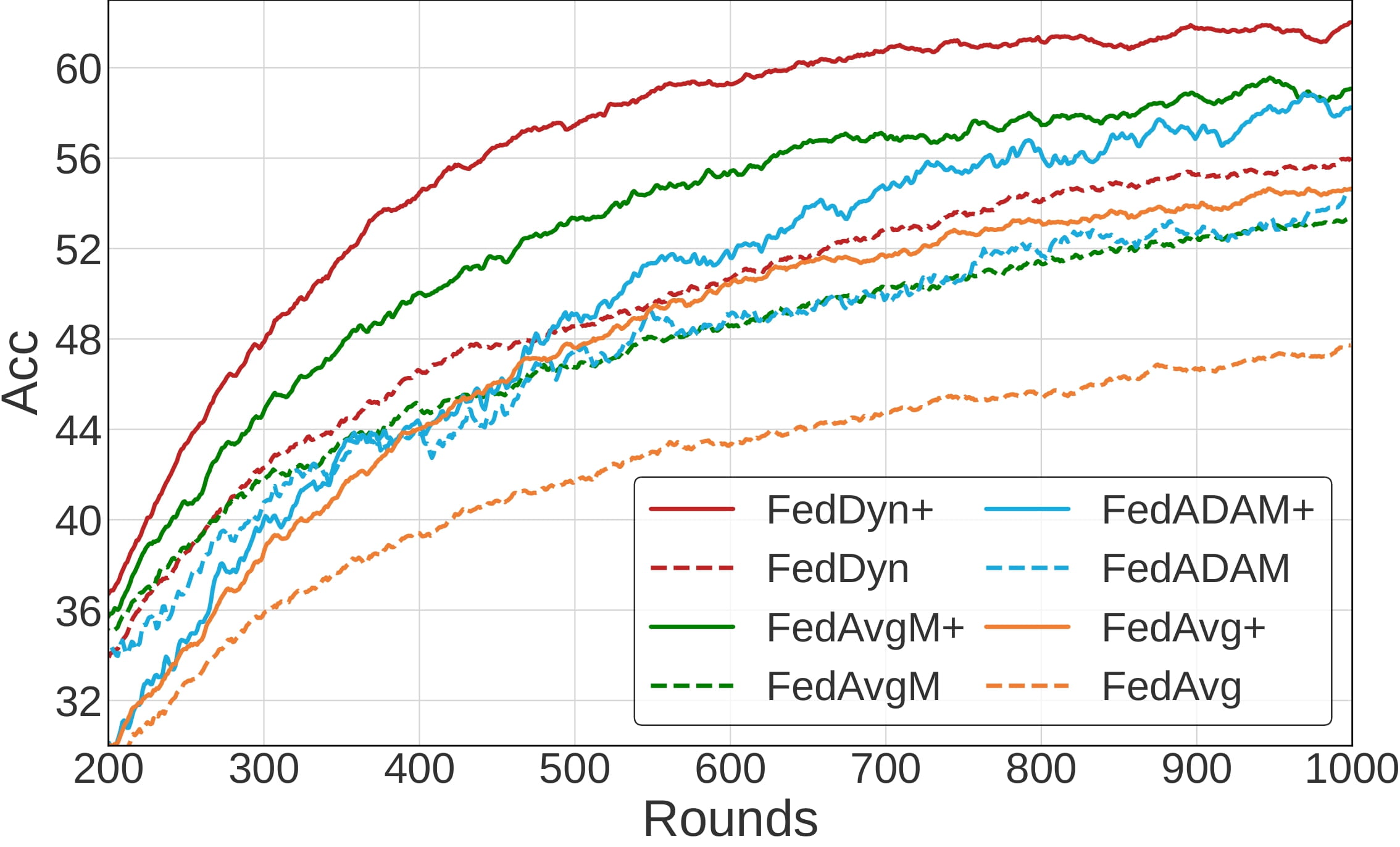

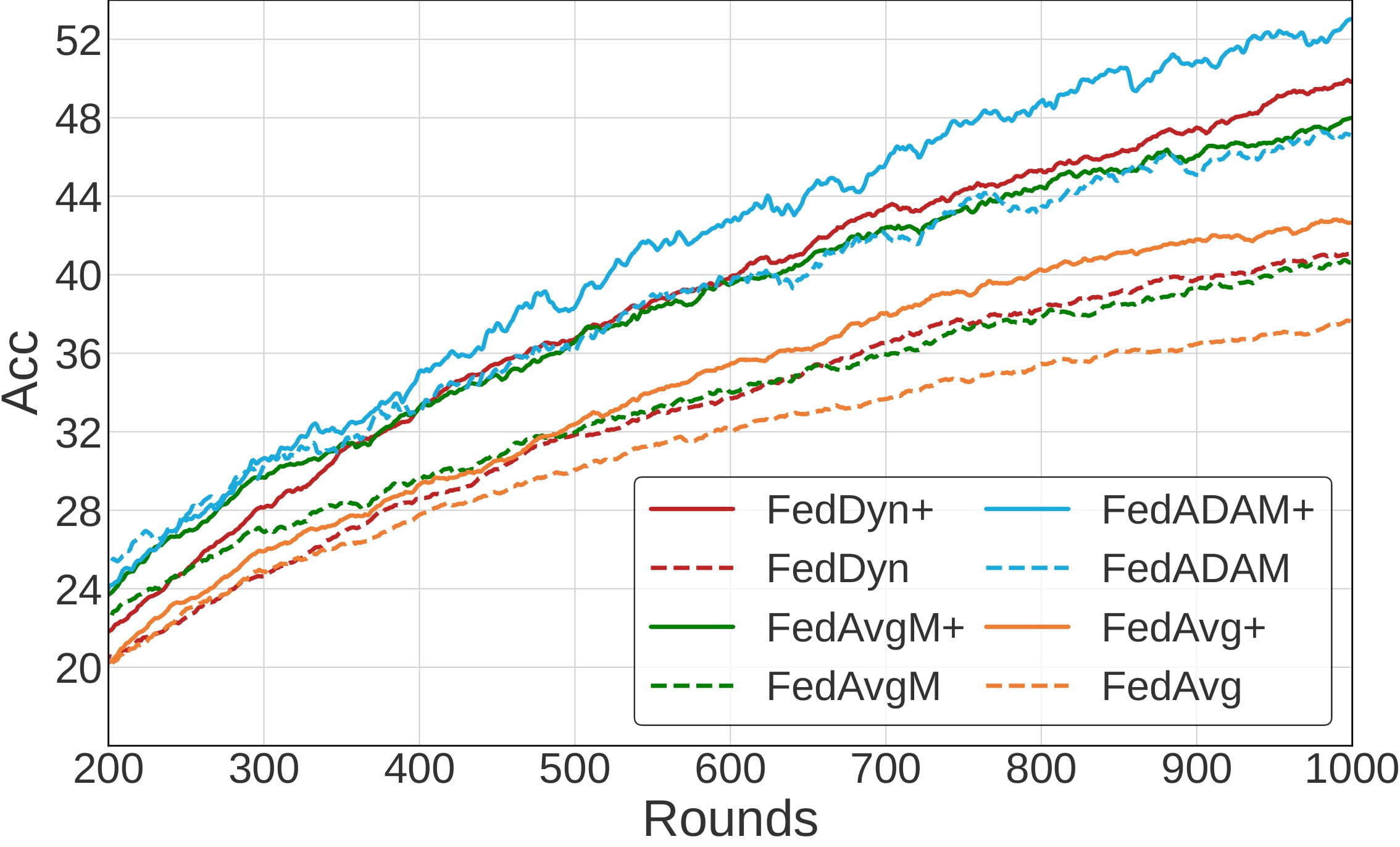

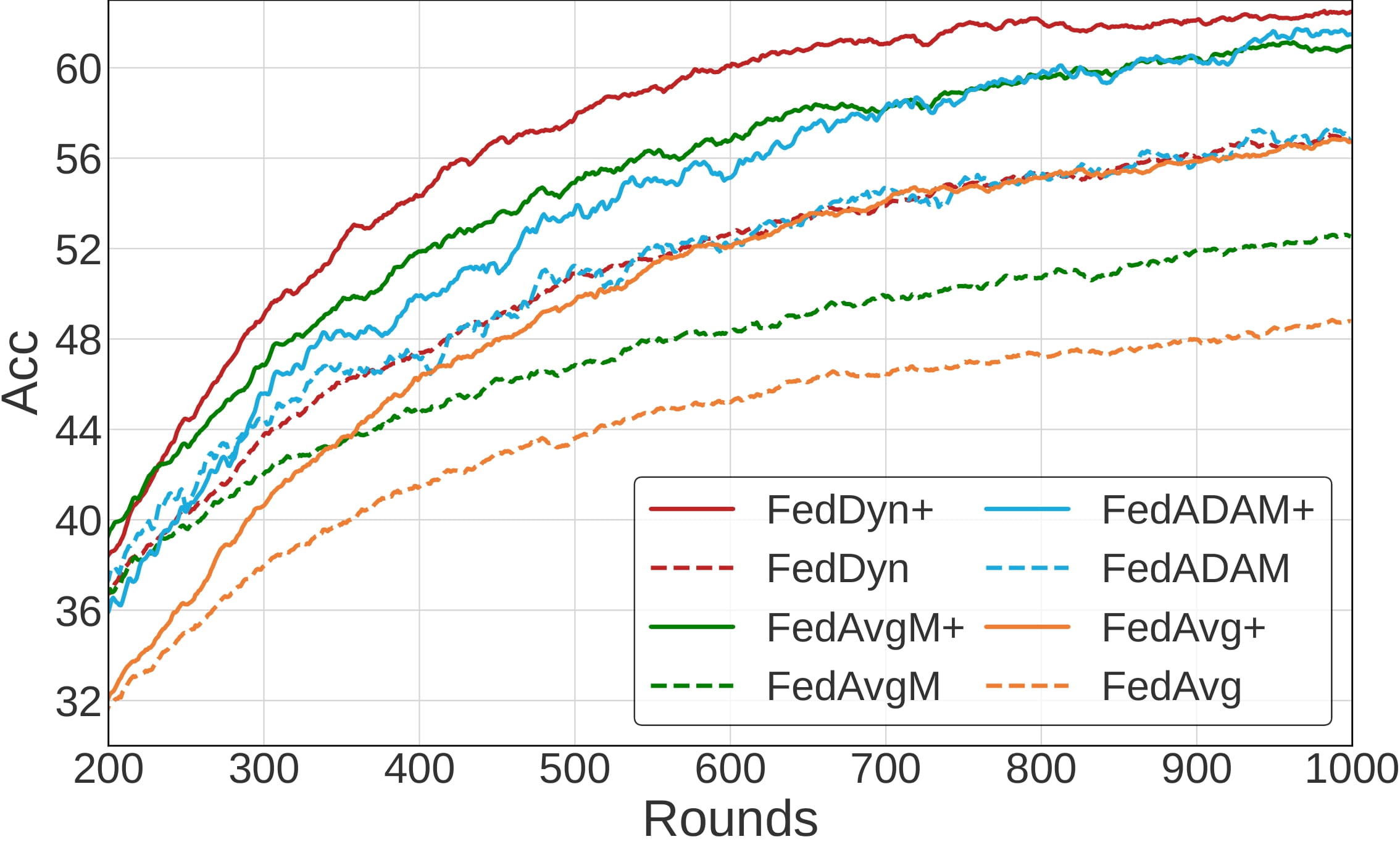

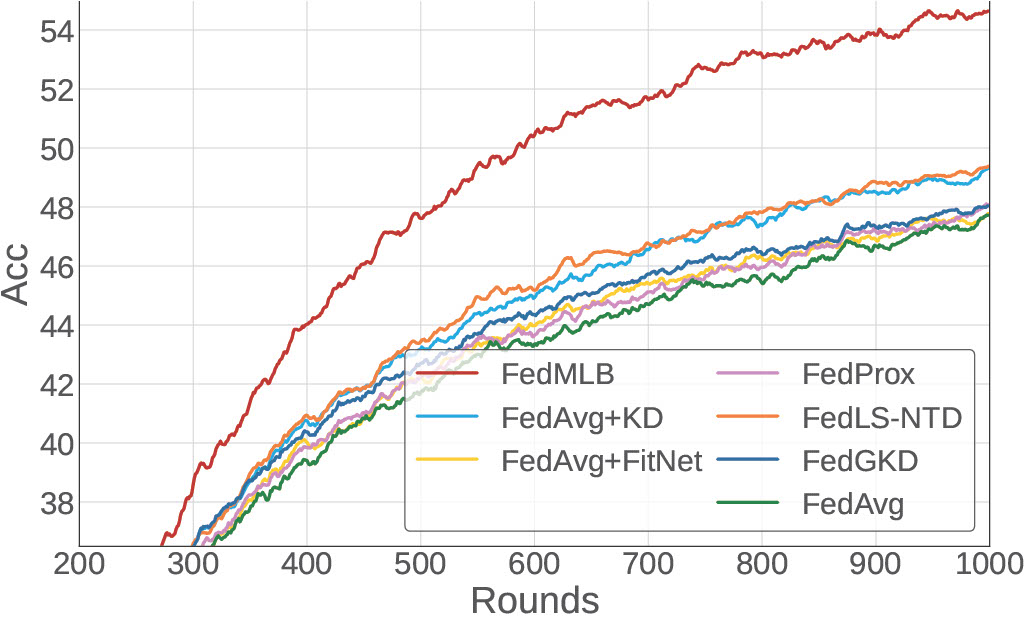

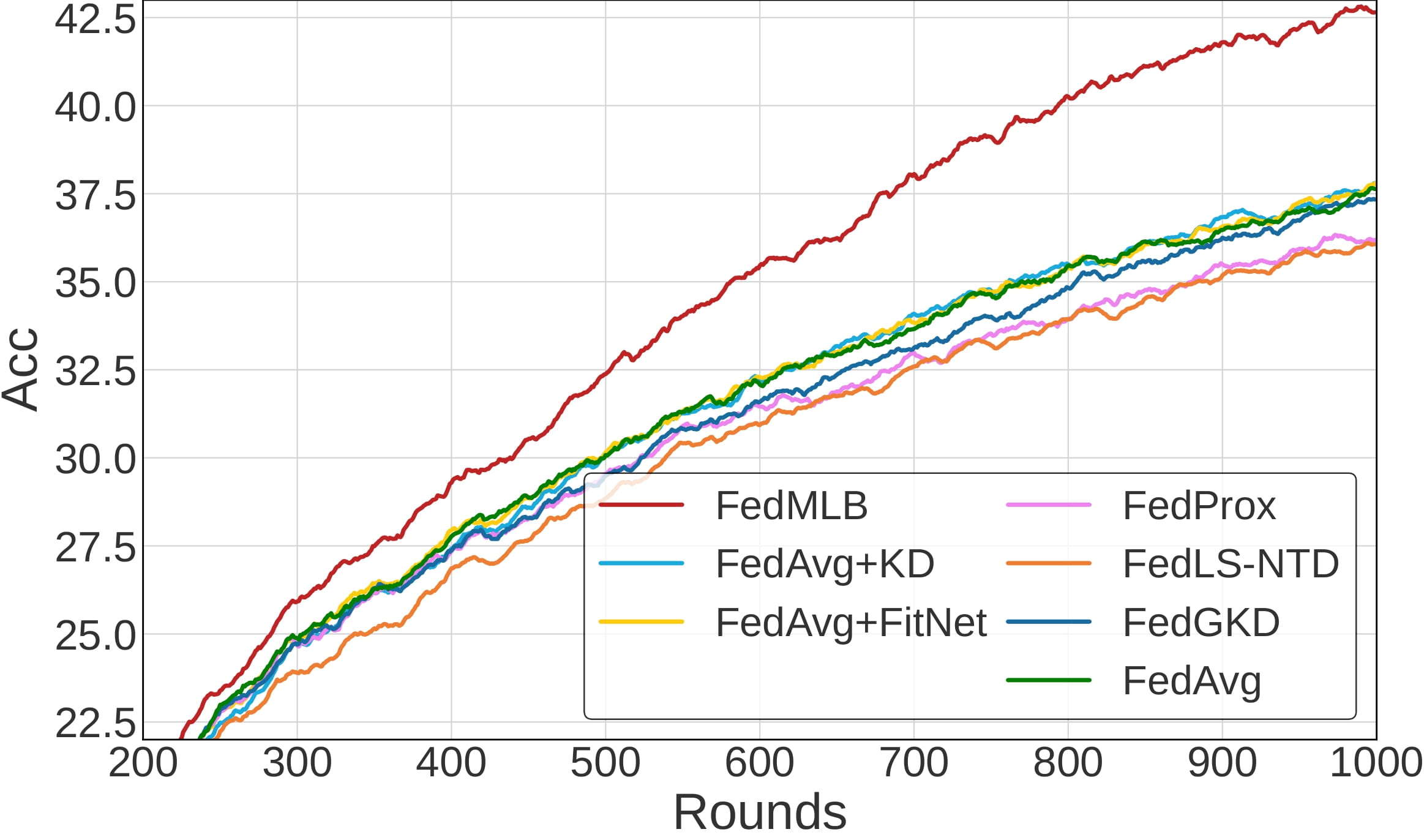

We observe that FedMLB consistently outperforms the baselines by large margins even as the number of local iterations increases, a setting that is effective for reducing the communication cost.

Citation

@inproceedings{kim2022multi,

author = {Kim, Jinkyu and Kim, Geeho and Han, Bohyung},

title = {Multi-Level Branched Regularization for Federated Learning},

booktitle = {ICML},

year = {2022}

}